Chroma is an innovative sensory interaction that lets users experience the link between sound and colour, a phenomenon known as chromesthesia. It is intended for an art gallery, where visitors are likely to be in a relaxed and contemplative mindset.

To interact with the installation, the user first enters a dark room containing headphones, a set of pens, and a drawing canvas mounted on an easel. With the headphones on, the user selects a pen and begins to draw on the canvas. As they draw, sound is generated that reflects their drawing, so users create not only a visual drawing but also a related audio drawing. Specifically, the pitch changes with the gradient of the line being drawn, and the volume of each sound depends on how much of its colour appears on the board. Users therefore hear the sound respond to each stroke as they draw, and also evolve as the picture builds up over the whole drawing experience.

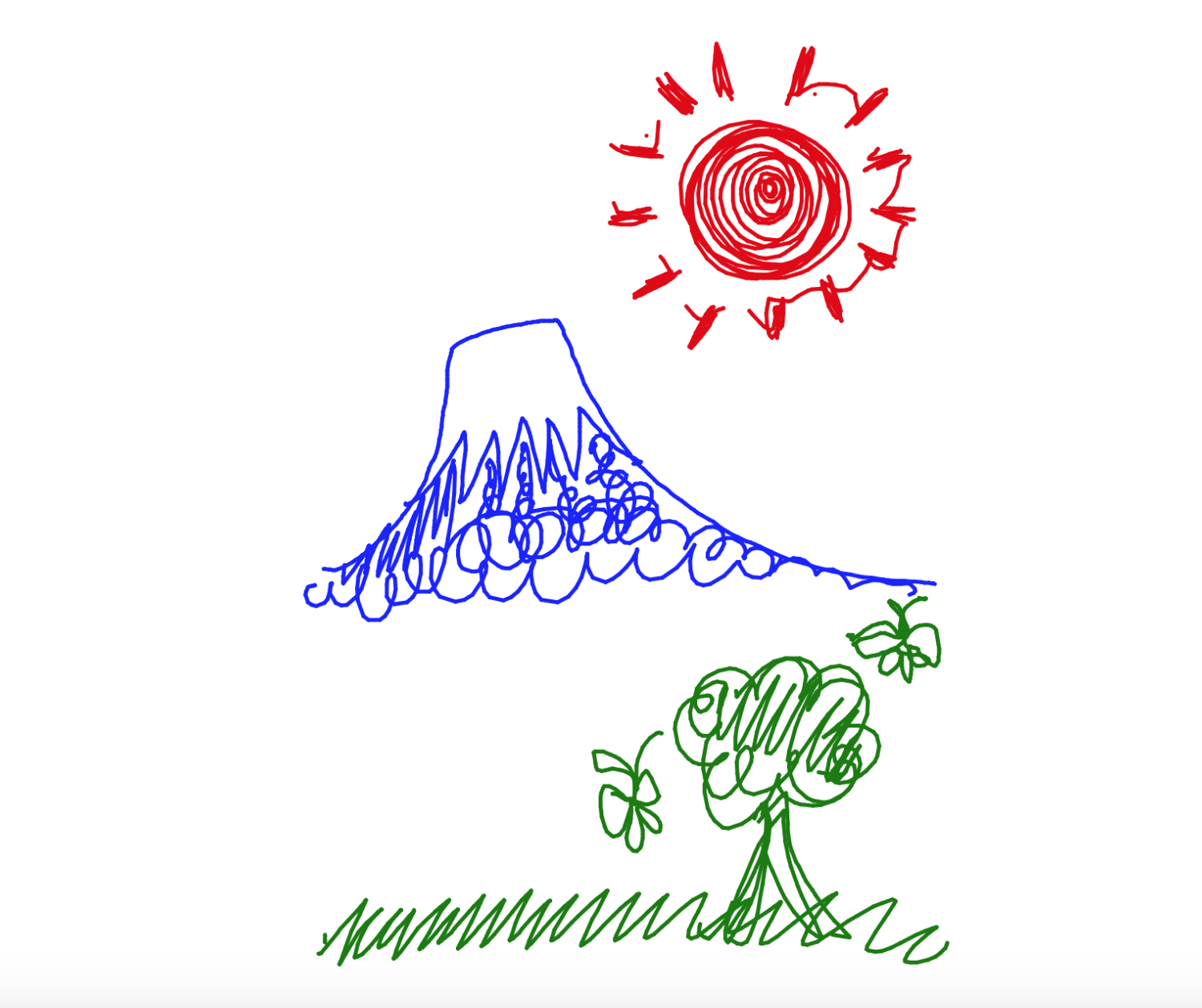

Each colour is assigned a unique sound drawn from the real world: birdsong for green, fire for red, and a flowing river for blue. We intend these colour-sound associations to make the interaction playful and to encourage users to rely on their hearing rather than their sight when drawing. For example, a user who hears birdsong while drawing may be more inclined to draw birds singing.

At the end of the interaction, users can take their drawing with them. This encourages them to share their experience with others and to reflect on their own experience of chromesthesia. Chroma is also designed to be accessible to visually impaired people. Because the experience relies more on a user's ability to hear sounds than on visuals, a visually impaired person should be able to enjoy the same experience as a sighted person.

Technical Description

The Chroma interaction uses an infrared touch conversion frame to detect the position of the pen tip on the canvas. The touch coordinates are sent to a custom JavaScript application that records them and calculates how they change over time. These changes drive distinct changes in the sound. The following drawing-sound controls were implemented in the Chroma application:

- Changing the drawing angle of a line changes the pitch of the current sound. Every 200 milliseconds, four sets of coordinates are used to form two lines. The angle difference between these lines is summed continuously over time to give the pitch value.

- The volume of a colour's sound increases as more of that colour appears on the drawing board. An array of unique coordinate values is updated as the user draws, and the length of this array controls the volume.

- Sound is placed in the left or right audio channel according to where the user draws on the canvas. The existing coordinate array is used: its ‘y’ values are summed and then divided by the array length to give an average ‘y’ position. This average position is used to divide the sound between the left and right audio channels.
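The three mappings above can be sketched in TypeScript, which is close to the project's JavaScript. This is a minimal sketch under our own assumptions, not the Chroma source. It makes the following assumptions:

- The four samples form two segments, p0 -> p1 and p2 -> p3.
- Each angle difference is wrapped into [-PI, PI] before it is summed.
- Coordinates are rounded to whole units before de-duplication.
- The linear mapping from average 'y' to a pan value, with low 'y' to the left, is our own choice.
- The names `ColourTrack`, `MAX_UNIQUE_POINTS` and `CANVAS_HEIGHT`, and their values, are hypothetical.

```typescript
// Hypothetical sketch of Chroma's drawing-to-sound mappings, not the
// project's actual code. One ColourTrack would be kept per pen colour.

interface Point {
  x: number;
  y: number;
}

// Hypothetical constants: tune to the touch frame's coordinate range.
const MAX_UNIQUE_POINTS = 5000; // unique points at which volume reaches 1
const CANVAS_HEIGHT = 1000; // largest 'y' value assumed from the frame

// Direction of the segment a -> b, in radians.
function segmentAngle(a: Point, b: Point): number {
  return Math.atan2(b.y - a.y, b.x - a.x);
}

// Signed difference between two angles, wrapped into [-PI, PI] so that
// crossing the +/-PI boundary does not add a spurious full turn.
function angleDelta(from: number, to: number): number {
  let d = to - from;
  while (d > Math.PI) d -= 2 * Math.PI;
  while (d < -Math.PI) d += 2 * Math.PI;
  return d;
}

class ColourTrack {
  private pitch = 0; // running sum of angle differences, in radians
  private readonly points: Point[] = []; // unique coordinates in this colour
  private readonly seen: { [key: string]: boolean } = {};

  // Called every 200 ms with the four most recent samples, which are
  // assumed to form the two segments p0 -> p1 and p2 -> p3.
  updatePitch(p0: Point, p1: Point, p2: Point, p3: Point): number {
    this.pitch += angleDelta(segmentAngle(p0, p1), segmentAngle(p2, p3));
    return this.pitch;
  }

  // Record a touch point rounded to whole units; repeats are ignored.
  addPoint(p: Point): void {
    const x = Math.round(p.x);
    const y = Math.round(p.y);
    const key = x + "," + y;
    if (this.seen[key]) return;
    this.seen[key] = true;
    this.points.push({ x, y });
  }

  // Volume in [0, 1], growing with the length of the unique-point array.
  volume(): number {
    return Math.min(this.points.length / MAX_UNIQUE_POINTS, 1);
  }

  // Average 'y' mapped linearly to a pan value in [-1, 1]
  // (-1 = fully left, 0 = centre, 1 = fully right).
  pan(): number {
    if (this.points.length === 0) return 0;
    const sumY = this.points.reduce((sum, p) => sum + p.y, 0);
    const avgY = sumY / this.points.length;
    return Math.max(-1, Math.min(1, (avgY / CANVAS_HEIGHT) * 2 - 1));
  }
}

// Usage: two distinct points at y = 250 and y = 750 average to the centre.
const green = new ColourTrack();
green.addPoint({ x: 10, y: 250 });
green.addPoint({ x: 10.2, y: 250.1 }); // rounds to (10, 250), so ignored
green.addPoint({ x: 20, y: 750 });
console.log(green.volume()); // 0.0004 (2 of 5000)
console.log(green.pan()); // 0
```

The sketch keeps a lookup object alongside the array so that duplicate checks stay cheap. The array length still gives the volume directly, as the description above specifies.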

To produce each sound, the JavaScript application uses the Web Audio API, which allows sounds to be loaded and manipulated in various ways. The key parts of the API used in the Chroma interaction were the source interface (for loading each sound and connecting it to its own gain node), the node interface (for volume and channels) and the playback interface (for pitch and playback rate).
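A Web Audio graph for one colour might look like the following TypeScript sketch. It is an illustration under stated assumptions, not the project's code:

- It uses a `StereoPannerNode` for left/right placement. Chroma may have routed channels differently.
- It drives pitch through the source's `playbackRate`, which also changes playback speed.
- It maps one full turn of summed angle to one octave using the hypothetical `playbackRateFromPitch`.
- The names `createVoice`, `updateVoice` and `loadSound` are also hypothetical.

```typescript
// Hypothetical mapping: one full turn (2*PI radians) of accumulated drawing
// angle shifts the sound by one octave, clamped to two octaves either way.
function playbackRateFromPitch(pitchRadians: number): number {
  const rate = Math.pow(2, pitchRadians / (2 * Math.PI));
  return Math.min(4, Math.max(0.25, rate));
}

interface ColourVoice {
  source: AudioBufferSourceNode;
  gain: GainNode;
  panner: StereoPannerNode;
}

// Fetch and decode one sound file (e.g. the birdsong used for green).
function loadSound(ctx: AudioContext, url: string): Promise<AudioBuffer> {
  return fetch(url)
    .then((response) => response.arrayBuffer())
    .then((data) => ctx.decodeAudioData(data));
}

// Build source -> gain -> stereo panner -> speakers for one looping sound.
function createVoice(ctx: AudioContext, buffer: AudioBuffer): ColourVoice {
  const source = ctx.createBufferSource();
  source.buffer = buffer;
  source.loop = true;
  const gain = ctx.createGain();
  gain.gain.value = 0; // silent until this colour is drawn
  const panner = ctx.createStereoPanner();
  source.connect(gain);
  gain.connect(panner);
  panner.connect(ctx.destination);
  source.start();
  return { source, gain, panner };
}

// Push the latest drawing-derived values (pitch in radians, volume in
// [0, 1], pan in [-1, 1]) into the graph, easing towards each target.
function updateVoice(
  ctx: AudioContext,
  voice: ColourVoice,
  pitch: number,
  volume: number,
  pan: number
): void {
  const now = ctx.currentTime;
  voice.source.playbackRate.setTargetAtTime(playbackRateFromPitch(pitch), now, 0.05);
  voice.gain.gain.setTargetAtTime(volume, now, 0.05);
  voice.panner.pan.setTargetAtTime(pan, now, 0.05);
}
```

The sketch uses `setTargetAtTime` rather than assigning `.value` directly. Each parameter then glides towards its new value after every 200 ms update, which should help avoid audible clicks when volume or pan changes abruptly.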

An Arduino Leonardo is wired to a series of micro-controllers that determine which colour is currently active during a drawing. The Arduino acts as a HID keyboard: each time one of these micro-controllers is triggered by a pen being removed from the holder, it sends a unique key input to the central computer. These key inputs are handled by ‘key-down’ functions in the JavaScript application, which change the colour of the line and the sound.
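On the application side, the Arduino's key inputs can be handled with an ordinary `keydown` listener. This TypeScript sketch is hypothetical. The keys "r", "g" and "b" and the sound file paths are placeholders, because the description does not say which keys or files were used. Only the colour-sound pairings come from the project description. The handler checks `event.repeat` so that a key held down does not re-trigger the switch.

```typescript
type Colour = "red" | "green" | "blue";

// Colour-sound pairings from the project; the file paths are hypothetical.
const COLOUR_TO_SOUND: Record<Colour, string> = {
  red: "sounds/fire.mp3",
  green: "sounds/birdsong.mp3",
  blue: "sounds/river.mp3",
};

// Hypothetical key assignments sent by the Arduino's HID keyboard.
function colourForKey(key: string): Colour | null {
  switch (key.toLowerCase()) {
    case "r":
      return "red";
    case "g":
      return "green";
    case "b":
      return "blue";
    default:
      return null; // any other key leaves the pen colour unchanged
  }
}

let activeColour: Colour | null = null;

// Switch the active pen colour; returns the sound to play, or null.
function selectColour(key: string): string | null {
  const colour = colourForKey(key);
  if (colour === null) return null;
  activeColour = colour;
  return COLOUR_TO_SOUND[activeColour];
}

function handleKeyDown(event: KeyboardEvent): void {
  if (event.repeat) return; // ignore auto-repeat while a key is held
  const sound = selectColour(event.key);
  if (sound !== null) {
    // Placeholder for the app's real work: recolouring the line and
    // switching to the new colour's sound.
    console.log(`active colour: ${activeColour}, sound: ${sound}`);
  }
}

// Only attach the listener when running in a browser.
if (typeof document !== "undefined") {
  document.addEventListener("keydown", handleKeyDown);
}
```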

Final Statement

In terms of exhibition outcomes, we received about 80 drawings from users interacting directly with the canvas, and hundreds of people listened to drawings on our viewing set-up. In addition, the School of ITEE agreed to buy our infrared frame from us, as we had demonstrated the technology's usability. We were also invited to demo our installation at UQ Open Day on Sunday, August 4th. The team was very pleased with all of these outcomes.

Our project received a very positive response from most participants. Significantly for us, some users left the installation enthusiastic and proudly showed their drawings to their friends. This was part of the intended interaction, so we considered the exhibition an overall success. One limitation of our installation was that it was an individual experience: only one person could use it at a time. This created a long queue, and many people were unable to use the installation at all. We considered imposing a time limit on the interaction so that more people could try it, but decided against it, as we did not want to make such a critical change to the interaction on the exhibition night.

Looking ahead, we have two major goals for the project. First and most importantly, we need to rewire our Arduino and rebuild our pen-holder contraption so that we can exhibit successfully again at Open Day. Second, we want to address the biggest limitation of our project: the limited range of colours. With only 3 colours available during the exhibit, users had only 3 sounds to play with. As a result, most user drawings sounded the same by the end of the interaction, despite looking different. Ownership and customisation were key aspects of our interaction. Adding more colour options would therefore increase the variation in the sounds produced by users' drawings and enhance the overall user experience.